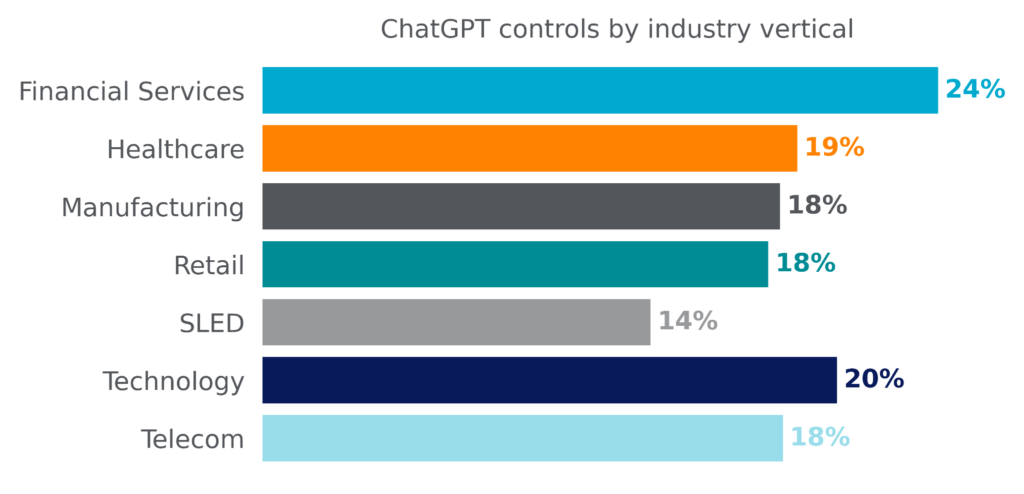

ChatGPT use is increasing exponentially in the enterprise, where users are submitting sensitive information to the chatbot, including proprietary source code, passwords and keys, intellectual property, and regulated data. In response, organizations have put controls in place to limit the use of ChatGPT. Financial services leads the pack, with nearly one in four organizations implementing controls around ChatGPT. This blog post explores which types of controls organizations in various industries have implemented.

Controlling ChatGPT

ChatGPT controls are most popular in the financial services sector, where nearly one in four organizations have implemented controls around its use. Unsurprisingly, ChatGPT adoption is also lowest in financial services, indicating that the controls put in place there were largely preventative, blocking users from the platform.

Interestingly, ChatGPT controls are in place in one in five technology organizations, putting the technology sector in second place. However, these controls have not had the same effect: ChatGPT adoption is highest in technology organizations. How can both be true? The answer lies in the types of controls that have been implemented.

Types of ChatGPT Controls

This post explores four different types of controls that organizations have implemented to monitor and control ChatGPT use.

Alert policies

Alert policies are informational controls. Their purpose is solely to provide visibility into how users in the organization are interacting with ChatGPT. Often, they are coupled with DLP policies to provide visibility into potentially sensitive data being posted to ChatGPT. Alert policies are often used during a learning phase to explore the effectiveness and impact of a blocking control, and are commonly converted to block policies after they have been tuned and tested.

User coaching policies

User coaching policies provide context to the users who trigger them, empowering each user to decide whether or not to continue. User coaching policies are often coupled with DLP policies, where they can be used to notify users that the data they are posting to ChatGPT appears to be sensitive. The user is typically reminded of company policy and asked whether they wish to continue. For example, if company policy prohibits uploading proprietary source code to ChatGPT, but the user is uploading open source code, they may opt to continue with the submission after the notification. For ChatGPT user coaching policies, users choose to proceed 57% of the time.

DLP policies

DLP policies allow organizations to permit access to ChatGPT while controlling the posting of sensitive data in prompts. Organizations configure DLP policies to trigger on every post to ChatGPT and inspect the content of the post before allowing it through. The most common policies focus on proprietary source code, passwords and keys, intellectual property, and regulated data.

Block policies

The most draconian type of policy is one that outright blocks all use of ChatGPT. Users are not allowed any interaction with the app: they cannot log in, post prompts, or sometimes even visit the login page itself.
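To make the differences between the four control types concrete, the following is a minimal, hypothetical sketch of how each policy type might decide what happens to a single ChatGPT prompt. It is not how Netskope or any other product implements these controls. The function names (`dlp_matches`, `evaluate`), the policy labels, and the three regex patterns are illustrative assumptions; real DLP engines use far richer detection. The sketch also assumes that alert and coaching policies are coupled with DLP, which the descriptions above say is common but not universal.

```python
import re
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    ALERT = "alert"  # let the post through, but record it for visibility
    COACH = "coach"  # warn the user and let them decide whether to proceed
    BLOCK = "block"  # stop the post (or all access to the app)


# Hypothetical DLP patterns for a few kinds of secrets; illustrative only.
SENSITIVE_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def dlp_matches(prompt: str) -> list[str]:
    """Return the names of the sensitive-data patterns found in the prompt."""
    return [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(prompt)]


def evaluate(prompt: str, policy: str) -> Action:
    """Decide what happens to a prompt under a hypothetical policy type."""
    if policy == "block":
        # Block policies deny all interaction, regardless of content.
        return Action.BLOCK
    if not dlp_matches(prompt):
        # No sensitive data detected: alert, coach, and DLP policies allow it.
        return Action.ALLOW
    if policy == "alert":
        return Action.ALERT
    if policy == "coach":
        return Action.COACH
    if policy == "dlp":
        # DLP policies block only the post that contains sensitive data.
        return Action.BLOCK
    raise ValueError(f"unknown policy type: {policy}")


if __name__ == "__main__":
    print(evaluate("How do I sort a list in Python?", "dlp"))
    print(evaluate("password = hunter2", "coach"))
    print(evaluate("Summarize this article", "block"))
```

The design choice worth noting is that only the block policy ignores prompt content entirely; the other three policy types let benign prompts through, which is consistent with how an organization can run many controls while still seeing high ChatGPT adoption.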

Popularity of ChatGPT Controls

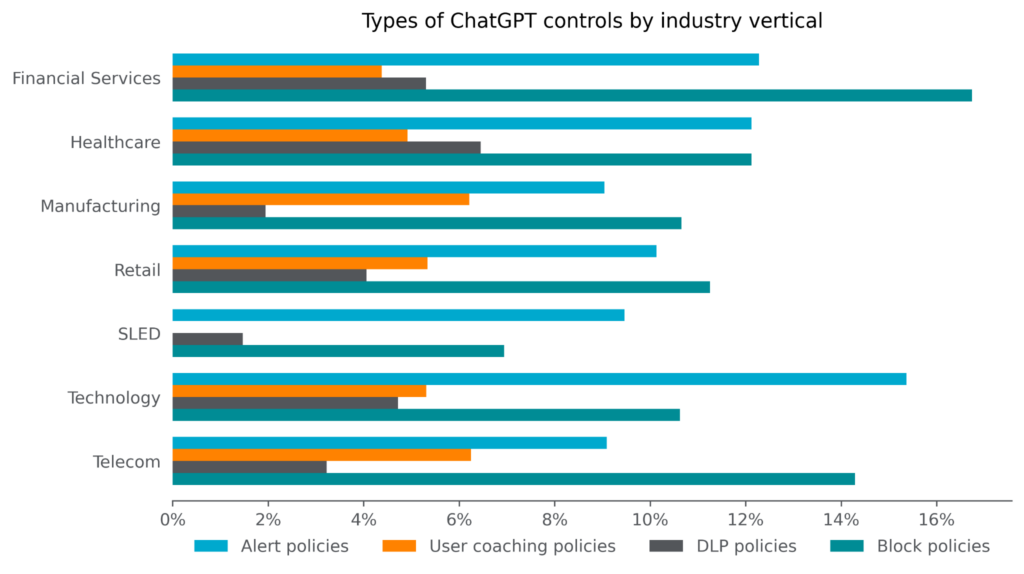

The chart above shows what percentage of organizations in each of the seven verticals have implemented each of the control types. Financial services leads in terms of block policies, with nearly 17% of organizations outright blocking ChatGPT. DLP policies controlling exactly what can be posted to ChatGPT are also most popular among financial services and healthcare, both highly regulated industries. State and local government and educational institutions (SLED) have the lowest overall adoption of ChatGPT controls and also the lowest adoption of user coaching policies, DLP policies, and block policies.

So how is it that technology companies lead in terms of ChatGPT usage and are second in terms of ChatGPT controls? The controls they implement are generally less draconian than those of other organizations. Technology organizations lead in alert-only policies, which provide visibility without restricting use, and only SLED has a lower percentage of organizations that enact block policies. Technology organizations use DLP and user coaching policies at rates similar to other verticals. In short, technology organizations aim to give their users access to ChatGPT while closely monitoring its use and implementing targeted controls where appropriate.

Safely enabling ChatGPT

Netskope customers can safely enable the use of ChatGPT with application access control, real-time user coaching, and data protection.

About this blog post

Netskope provides threat and data protection to millions of users worldwide. Information presented in this blog post is based on anonymized usage data collected by the Netskope Security Cloud platform relating to a subset of Netskope customers with prior authorization. Statistics cover the period from May 8, 2023 through June 4, 2023. Statistics reflect both user behavior and organization policy.
